Network switches are often noisy, and many users find this frustrating. The noise comes mainly from the fans inside the switch, which cool its internal components. For small and medium-sized businesses (SMBs) that would prefer a fanless network switch, FS has introduced a new Ethernet access switch designed to meet their need for silent, cost-effective operation. Let's take a closer look at the energy-saving S2800-24T4F fanless switch.

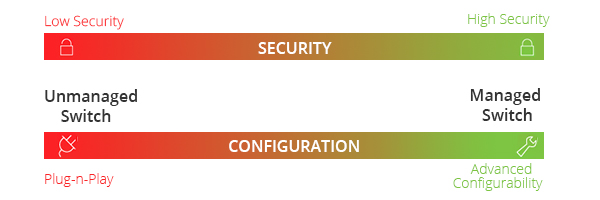

In some scenarios, fans inside a network switch are unavoidable, because switches generate considerable heat, especially when several of them are mounted in a rack alongside other active devices. In these cases, fans play an important role in cooling the switch's components. However, the constant fan noise can disturb people working near the switch, and in such situations a fanless switch may be preferable. Besides being quiet, fanless switches use less power than fan-cooled models and can be more reliable, since they have no fan that can wear out or fail. Instead of fans, they rely on passive, solid-state cooling to dissipate heat from the components inside the switch.

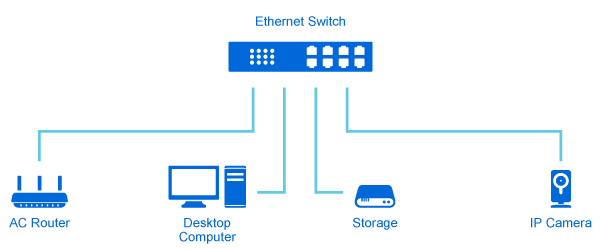

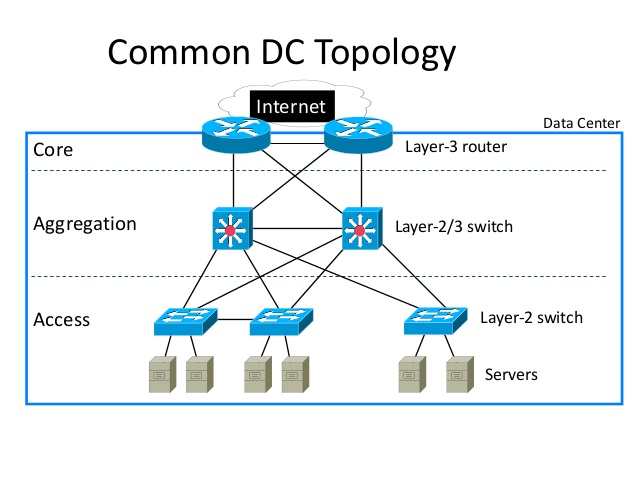

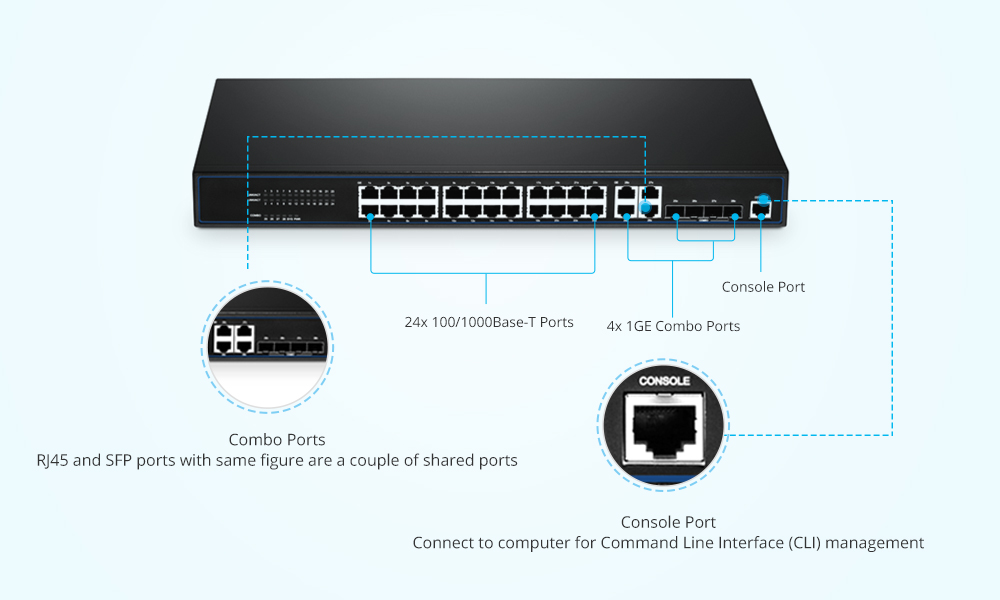

The FS S2800-24T4F is a fanless, energy-saving Ethernet access switch designed for cost-effective Gigabit access or aggregation in enterprise networks. Its silent, low-cost design makes it well suited to SMBs, labs, schools and Internet cafes. It offers a flexible port combination of 24×100/1000Base-T ports and 4×1GE combo SFP ports, so you can connect directly to a high-performance storage server or deploy a long-distance uplink to another switch. The S2800-24T4F also supports multiple configuration modes, which simplifies network management and maintenance. In addition, it uses a high-performance processor to provide full-speed, line-rate forwarding and a wide range of service features.

Highlights & Benefits

- Layer 2 Full Wire Speed Gigabit Forwarding Capability.

The S2800-24T4F offers up to 48 Gbps of backplane bandwidth and a packet forwarding rate of 42 Mpps, and its performance is not affected when ACLs, binding, attack protection or other functions are enabled.

- Web Configuration Optimized for Internet Cafes.

Customers can configure ports automatically or manually, and can secure their network with IP+VLAN+MAC+Port binding.

- Comprehensive Management and Maintenance.

The web management interface of the S2800-24T4F has been optimized for enterprise users, and the switch supports SNMP, Telnet and cluster management. Port loopback detection and LLDP neighbor detection are also provided.
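The forwarding figures in the first highlight can be sanity-checked with standard line-rate arithmetic. A minimum 64-byte Ethernet frame occupies 84 bytes on the wire once the 8-byte preamble/SFD and the 12-byte inter-frame gap are counted, so a 1 Gbps port carries at most about 1.488 million frames per second. The sketch below assumes that the 48 Gbps figure counts 24 gigabit ports in both directions (full duplex) and that the 42 Mpps figure counts all 28 gigabit interfaces at 64-byte line rate; FS does not state how its figures were derived, so treat this as an illustration, not the vendor's method.

```python
# Per-frame overhead on the wire for Ethernet (bytes).
MIN_FRAME = 64          # minimum Ethernet frame, including FCS
PREAMBLE_SFD = 8        # preamble + start-of-frame delimiter
INTER_FRAME_GAP = 12    # minimum idle time between frames

GIGABIT = 1e9           # link speed in bits per second


def line_rate_pps(link_bps: float, frame_bytes: int = MIN_FRAME) -> float:
    """Maximum frames per second one link can carry at a given frame size."""
    bits_on_wire = (frame_bytes + PREAMBLE_SFD + INTER_FRAME_GAP) * 8
    return link_bps / bits_on_wire


def switching_capacity_bps(ports: int, link_bps: float) -> float:
    """Non-blocking capacity, counting both directions of each full-duplex port."""
    return ports * link_bps * 2


if __name__ == "__main__":
    per_port = line_rate_pps(GIGABIT)
    print(f"per port: {per_port:,.0f} pps")  # per port: 1,488,095 pps
    for ports in (24, 28):
        mpps = ports * per_port / 1e6
        gbps = switching_capacity_bps(ports, GIGABIT) / 1e9
        print(f"{ports} ports: {mpps:.1f} Mpps, {gbps:.0f} Gbps")
    # 24 ports: 35.7 Mpps, 48 Gbps
    # 28 ports: 41.7 Mpps, 56 Gbps
```

Under these assumptions, 24 full-duplex gigabit ports give exactly 48 Gbps, while 28 interfaces at 64-byte line rate give about 41.7 Mpps, which rounds to the quoted 42 Mpps.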

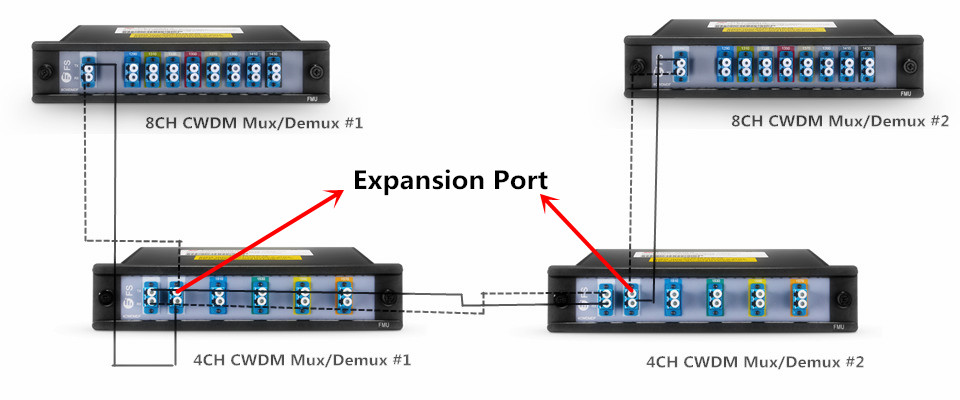

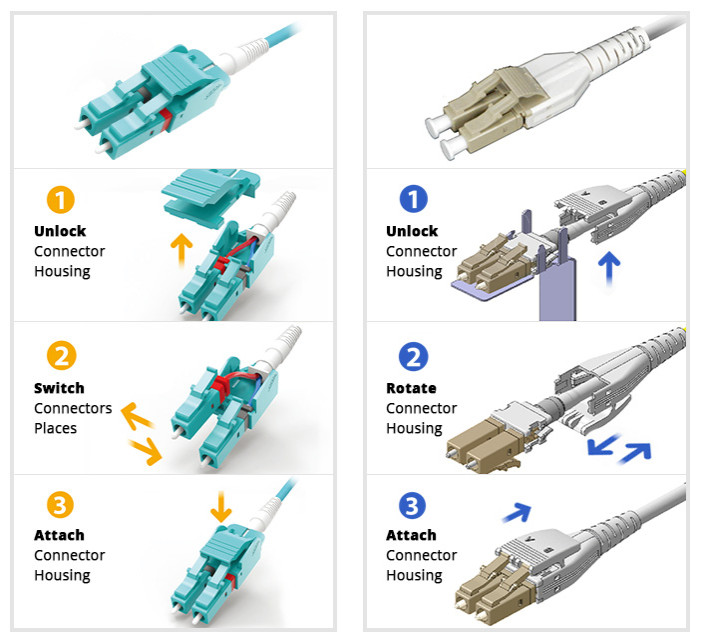

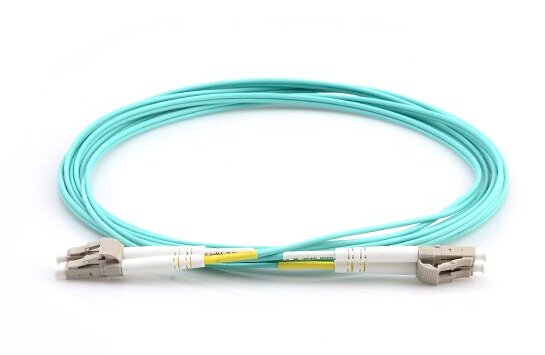
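The IP+VLAN+MAC+Port binding mentioned in the highlights can be pictured as a lookup table: on a port that has bindings, a frame is admitted only if its source IP address, VLAN, source MAC address and ingress port match a configured entry. The model below is a hypothetical illustration of that idea; the `Binding` and `BindingTable` names are invented here, and it does not reproduce the S2800-24T4F's actual configuration interface or its exact matching rules (for example, it assumes ports without any binding stay unrestricted).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Binding:
    """One hypothetical IP+VLAN+MAC+Port binding entry."""
    ip: str
    vlan: int
    mac: str
    port: int


class BindingTable:
    """Toy model of a binding check; not the switch's real implementation."""

    def __init__(self) -> None:
        self._entries: set[Binding] = set()
        self._bound_ports: set[int] = set()

    def bind(self, ip: str, vlan: int, mac: str, port: int) -> None:
        # Normalise the MAC address so lookups are case-insensitive.
        self._entries.add(Binding(ip, vlan, mac.lower(), port))
        self._bound_ports.add(port)

    def permit(self, ip: str, vlan: int, mac: str, port: int) -> bool:
        # Assumption: ports without any binding are left unrestricted.
        if port not in self._bound_ports:
            return True
        # On a bound port, all four fields must match a configured entry.
        return Binding(ip, vlan, mac.lower(), port) in self._entries


if __name__ == "__main__":
    table = BindingTable()
    table.bind("192.168.1.10", 10, "00:11:22:33:44:55", 3)
    print(table.permit("192.168.1.10", 10, "00:11:22:33:44:55", 3))  # True
    print(table.permit("192.168.1.99", 10, "00:11:22:33:44:55", 3))  # False
```

In this sketch, a host that spoofs a different IP address on a bound port is rejected, because the full four-field tuple no longer matches.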

As mentioned, the FS S2800-24T4F provides 24×100/1000Base-T ports and 4×1GE combo SFP ports for network connectivity. In the SFP ports, you can use 100BASE, 1000BASE, BiDi, CWDM or DWDM SFP optical transceivers, or 1000BASE-T SFP copper (RJ-45) transceivers. FS provides many high-quality compatible SFP modules for the S2800-24T4F fanless switch.

The main compatible SFP modules are listed in the table below:

| FS.COM P/N | Part ID | Type | Wavelength | Operating Distance | Interface | DOM Support |
|---|---|---|---|---|---|---|
| SFP-FB-GE-T | 37767 | 100BASE-T | N/A | 100 m | RJ-45, Cat5 | No |
| | 37769 | 10/100BASE-T | N/A | 100 m | RJ-45, Cat5 | No |
| SFP-GB-GE-T | 20036 | 10/100/1000BASE-T | N/A | 100 m | RJ-45, Cat5 | Yes |
| | 20057 | 1000BASE-T | N/A | 100 m | RJ-45, Cat5 | Yes |
| CWDM-SFP1G-ZX | 23807 | 1000BASE-CWDM | 1270 nm | 80 km | LC duplex, SMF | Yes |
| | 47123 | 1000BASE-CWDM | 1290 nm | 80 km | LC duplex, SMF | Yes |
| | 47124 | 1000BASE-CWDM | 1310 nm | 80 km | LC duplex, SMF | Yes |
| | 47125 | 1000BASE-CWDM | 1330 nm | 80 km | LC duplex, SMF | Yes |
| | 47126 | 1000BASE-CWDM | 1350 nm | 80 km | LC duplex, SMF | Yes |
| | 47127 | 1000BASE-CWDM | 1370 nm | 80 km | LC duplex, SMF | Yes |
| | 47128 | 1000BASE-CWDM | 1390 nm | 80 km | LC duplex, SMF | Yes |
| | 47129 | 1000BASE-CWDM | 1410 nm | 80 km | LC duplex, SMF | Yes |
| | 47130 | 1000BASE-CWDM | 1430 nm | 80 km | LC duplex, SMF | Yes |
| | 47131 | 1000BASE-CWDM | 1450 nm | 80 km | LC duplex, SMF | Yes |
| | 47132 | 1000BASE-CWDM | 1470 nm | 80 km | LC duplex, SMF | Yes |
| | 47133 | 1000BASE-CWDM | 1490 nm | 80 km | LC duplex, SMF | Yes |
| | 47134 | 1000BASE-CWDM | 1510 nm | 80 km | LC duplex, SMF | Yes |
| | 47135 | 1000BASE-CWDM | 1530 nm | 80 km | LC duplex, SMF | Yes |
| | 47136 | 1000BASE-CWDM | 1550 nm | 80 km | LC duplex, SMF | Yes |
| | 47137 | 1000BASE-CWDM | 1570 nm | 80 km | LC duplex, SMF | Yes |
| | 47138 | 1000BASE-CWDM | 1590 nm | 80 km | LC duplex, SMF | Yes |
| | 47139 | 1000BASE-CWDM | 1610 nm | 80 km | LC duplex, SMF | Yes |
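The CWDM-SFP1G-ZX modules in the table differ only in wavelength, stepping 20 nm from 1270 nm to 1610 nm, which gives 18 channels. When planning which channels to light up, for example on a CWDM multiplexer, a small helper can list that grid and check that a wavelength sits on it. This is a minimal sketch assuming the nominal 1270 to 1610 nm labels used in the table; note that ITU-T G.694.2 specifies the centre wavelengths as 1271 to 1611 nm, and vendors commonly round them down as above. The function names are illustrative only.

```python
# Nominal CWDM grid as labelled in the table above.
CWDM_START_NM = 1270
CWDM_SPACING_NM = 20
CWDM_CHANNELS = 18


def cwdm_grid() -> list[int]:
    """All nominal CWDM wavelengths, in nm, from lowest to highest."""
    return [CWDM_START_NM + CWDM_SPACING_NM * k for k in range(CWDM_CHANNELS)]


def channel_index(wavelength_nm: int) -> int:
    """0-based channel index of a wavelength; ValueError if it is off the grid."""
    offset = wavelength_nm - CWDM_START_NM
    index = offset // CWDM_SPACING_NM
    if offset % CWDM_SPACING_NM != 0 or not 0 <= index < CWDM_CHANNELS:
        raise ValueError(f"{wavelength_nm} nm is not on the nominal CWDM grid")
    return index


if __name__ == "__main__":
    print(cwdm_grid())
    print(channel_index(1550))  # 14
```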

Ethernet access switches have become an integral part of networking because of the speed and efficiency with which they handle data traffic. At FS, we know how much our small and medium-sized customers need reliable and affordable Ethernet switches. That is why we offer the S2800-24T4F fanless switch, which is built to high quality standards and backed by a one-year limited warranty that includes free maintenance for any quality problems. In addition, all FS.COM transceivers are 100% functionality-tested and guaranteed compatible for outstanding network performance. This includes the SFP transceivers listed above, which are fully compatible with the S2800-24T4F switch and cost considerably less than other vendors' optical modules. For more details, please visit www.fs.com.