Data center layout design is a challenging task that requires expertise, time, and effort. Done properly, however, it produces a data center that can house in-house servers and a wide range of other IT equipment for years. Whether you are designing the facility for your own company or for a cloud-service provider, getting every detail right is crucial.

As such, data center designers should develop a thorough data center layout. A layout is especially useful during construction because it specifies the best placement of physical hardware and other resources in the facility.

What Is Included in a Data Center Floor Plan?

The floor plan is an important part of the data center layout. A well-designed floor plan improves the data center’s cooling performance, simplifies installation, and reduces energy needs. Unfortunately, most data center floor plans emerge from incremental deployment rather than from a central plan. A data center floor plan influences the following:

- Achievable power density of the data center

- The complexity of power and cooling distribution networks

- Electrical power usage of the data center

Below are a few tips to consider when designing a data center floor plan:

Balance Density with Capacity

“The more, the better” doesn’t apply when designing a data center. Keep the tradeoff between space and power in mind and weigh your options carefully. If you are considering high-density servers, make sure your budget can support them: dense servers require more power and more advanced cooling infrastructure. A good floor plan lets you work this out beforehand.
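The space/power tradeoff can be made concrete with a quick calculation. The sketch below assumes a hypothetical 200 kW IT load, uses the six-tiles-per-rack rule of thumb from later in this article as its default, and applies the standard conversions of 3.412 BTU/h per watt and 12,000 BTU/h per ton of refrigeration; `plan_for_density` is an illustrative helper, not a standard tool.

```python
import math

# Standard conversions: 1 W of IT load dissipates about 3.412 BTU/h of heat,
# and 1 ton of refrigeration removes 12,000 BTU/h.
BTU_PER_HR_PER_WATT = 3.412
BTU_PER_HR_PER_TON = 12_000


def plan_for_density(total_it_load_kw: float, kw_per_rack: float,
                     tiles_per_rack: int = 6) -> dict:
    """Estimate racks, floor tiles, and heat load for one rack-density choice.

    Assumes the IT load is spread evenly across racks and that all of it
    ends up as heat the cooling system must remove.
    """
    racks = math.ceil(total_it_load_kw / kw_per_rack)
    avg_kw_per_rack = total_it_load_kw / racks
    total_heat_btu_hr = total_it_load_kw * 1000 * BTU_PER_HR_PER_WATT
    return {
        "racks": racks,
        "floor_tiles": racks * tiles_per_rack,
        # Total cooling is set by the IT load, not by how densely it is packed
        "cooling_tons": round(total_heat_btu_hr / BTU_PER_HR_PER_TON, 1),
        # Denser racks concentrate the same heat into fewer, hotter spots
        "heat_per_rack_btu_hr": avg_kw_per_rack * 1000 * BTU_PER_HR_PER_WATT,
    }


for density_kw in (5, 20):
    print(density_kw, plan_for_density(200, density_kw))
```

In this sketch, moving from 5 kW to 20 kW per rack cuts the rack count from 40 to 10 and the floor tiles from 240 to 60, while total cooling stays the same. The heat each rack must shed, however, quadruples, which is why dense servers call for more advanced cooling.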

Consider Unique Layouts

Nothing requires you to reuse old floor layouts. Base your floor design on your organization’s specific needs. If your company is growing rapidly, its data center needs will keep changing too, so older layouts may no longer fit. Review multiple layouts and choose the one that best suits your facility.

Think About the Future

Because a data center design follows organizational needs, it should also anticipate how those needs will change. You may not need to install or replace some equipment yet, but you might have to within a few years as the facility’s needs evolve. Simply put, your data center should accommodate your company’s needs several years into the future, which makes later expansion easier.
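One way to plan for the future is to project IT load under an assumed growth rate and check when it would outgrow a candidate design. The sketch below assumes constant compound annual growth, which real demand rarely follows exactly; the figures and function names are hypothetical.

```python
def project_it_load(current_kw: float, annual_growth: float, years: int) -> list[float]:
    """IT load for year 0..years, assuming constant compound annual growth."""
    return [current_kw * (1 + annual_growth) ** year for year in range(years + 1)]


def years_until_full(current_kw: float, annual_growth: float,
                     design_capacity_kw: float, horizon: int = 30):
    """First year the projected load exceeds design capacity, or None within the horizon."""
    for year, load in enumerate(project_it_load(current_kw, annual_growth, horizon)):
        if load > design_capacity_kw:
            return year
    return None


# Hypothetical: 100 kW today, 15% growth per year, 200 kW design capacity
print(years_until_full(100, 0.15, 200))
```

Here the load reaches about 175 kW in year 4 and about 201 kW in year 5, so the call prints 5: a 200 kW design would need expansion within five years under these assumptions.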

Floor Planning Sequence

A floor or system planning sequence outlines the flow of activity that transforms the initial idea into an installation plan. The floor planning sequence involves the following five tasks:

Determining IT Parameters

The floor plan begins with a general idea that prompts the company to change or increase its IT capabilities. From that idea, the data center’s capacity, growth plan, and criticality are determined. Note that these three factors describe the IT function of the data center, not the physical infrastructure that supports it. Because that infrastructure is the end result of the planning sequence, these parameters guide its development and dictate the data center’s physical infrastructure requirements.

Developing System Concept

This step uses the IT parameters as a foundation for the general concept of the data center’s physical infrastructure. The main goal is a reference design that delivers the desired capacity and criticality and can scale to support future growth plans. Because these parameters vary so widely, more than a thousand possible physical infrastructure systems could be drawn up. Designers should treat these as a library and pick a few “good” designs from it.
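The narrowing-down described here can be sketched as a filter. The three IT parameters from the previous step become a small record, and a hypothetical library of reference designs is reduced to those that meet them. The field names, the 1 to 4 criticality scale, and the sample designs are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ITParameters:
    capacity_kw: float      # IT load the facility must support on day one
    criticality: int        # hypothetical scale: 1 (lowest) to 4 (highest)
    growth_kw: float        # extra load expected under the growth plan


@dataclass(frozen=True)
class ReferenceDesign:
    name: str
    capacity_kw: float      # load the design supports as built
    criticality: int        # highest criticality level the design meets
    max_scaled_kw: float    # load the design can grow to without a redesign


def shortlist(library: list[ReferenceDesign], params: ITParameters,
              limit: int = 3) -> list[ReferenceDesign]:
    """Keep designs that meet capacity, criticality, and growth, least over-specified first."""
    candidates = [
        d for d in library
        if d.capacity_kw >= params.capacity_kw
        and d.criticality >= params.criticality
        and d.max_scaled_kw >= params.capacity_kw + params.growth_kw
    ]
    # Prefer designs closest to the requirement to avoid paying for unused capacity
    candidates.sort(key=lambda d: (d.criticality, d.capacity_kw))
    return candidates[:limit]


library = [
    ReferenceDesign("A", 100, 2, 150),
    ReferenceDesign("B", 250, 3, 500),
    ReferenceDesign("C", 300, 2, 300),
    ReferenceDesign("D", 500, 4, 1000),
]
needs = ITParameters(capacity_kw=200, criticality=3, growth_kw=150)
print([d.name for d in shortlist(library, needs)])  # ['B', 'D']
```

Sorting by criticality and then capacity is one possible tie-breaker; a real shortlist would also weigh cost and site fit.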

Determining User Requirements

User requirements capture the organizational needs specific to the project. This phase collects and evaluates those needs to determine whether they are valid or need adjustment, which helps avoid problems and reduce costs. User requirements can include key features, prevailing IT constraints, logistical constraints, target capacity, and so on.
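Evaluating requirements for validity can start with checking them against known site constraints. The sketch below compares a target capacity and rack height against hypothetical site limits. The dictionary keys and the assumed PUE (power usage effectiveness, total facility power divided by IT power) of 1.5 are illustrative assumptions, not figures from this article.

```python
import math


def review_requirements(req: dict, site: dict, pue: float = 1.5) -> list[str]:
    """Flag user requirements that conflict with site constraints.

    `pue` is an assumed value used to estimate total facility draw
    (IT load plus cooling and other overhead).
    """
    issues = []
    facility_kw = req["target_capacity_kw"] * pue
    if facility_kw > site["utility_power_kw"]:
        issues.append(f"needs ~{facility_kw:.0f} kW but only "
                      f"{site['utility_power_kw']} kW of utility power is available")
    racks = math.ceil(req["target_capacity_kw"] / req["kw_per_rack"])
    tiles = racks * req["tiles_per_rack"]
    if tiles > site["floor_tiles"]:
        issues.append(f"{racks} racks need {tiles} tiles; the room has {site['floor_tiles']}")
    # Logistical constraint: racks must fit through the delivery path
    if req["rack_height_mm"] > site["door_height_mm"]:
        issues.append("racks are taller than the delivery door")
    return issues


req = {"target_capacity_kw": 300, "kw_per_rack": 10,
       "tiles_per_rack": 6, "rack_height_mm": 2200}
site = {"utility_power_kw": 400, "floor_tiles": 150, "door_height_mm": 2100}
for issue in review_requirements(req, site):
    print(issue)
```

Each flagged item is a prompt to adjust either the requirement or the site plan before specifications are written.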

Generating Specifications

This step translates user requirements into detailed specifications for the data center design. Specifications provide a baseline of rules to follow in the last step, creating a detailed design. Specifications can be:

- Standard specifications – these don’t vary from one project to another. They include regulatory compliance, workmanship, best practices, safety, etc.

- User specifications – define user-specific details of the project.

Generating a Detailed Design

This is the last step of the floor planning sequence. It produces:

- A detailed list of the components

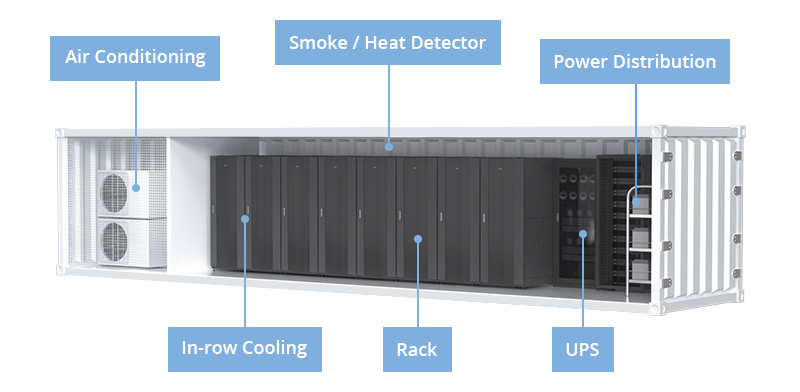

- Exact floor plan with racks, including power and cooling systems

- Clear installation instructions

- Project schedule

If the specifications are complete, clear, and robust, a detailed design can be generated largely automatically. However, the process still requires input from professional engineers.

Principles of Equipment Layout

Data center infrastructure is the core of the entire IT architecture. Despite this importance, more than 70% of network downtime stems from physical layer problems, particularly cabling. Planning effective data center infrastructure is therefore crucial to the data center’s performance, scalability, and resiliency.

Keep the following principles in mind when designing the equipment layout.

Control Airflow Using Hot-aisle/Cold-aisle Rack Layout

The principle of controlling airflow with a hot-aisle/cold-aisle rack layout is well defined in various documents, including those of ASHRAE TC 9.9 (Mission Critical Facilities). It maximizes the separation between IT equipment exhaust air and fresh intake air by placing cold aisles where equipment intakes face and hot aisles where exhaust air is released. This reduces the amount of hot air drawn into equipment air intakes and lets data centers achieve power densities up to 100% higher.
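The alternating row orientation behind this principle can be expressed as a small check. The sketch below assumes rows are listed from south to north and records only which way each row’s fronts (air intakes) face. A “mixed” aisle is one where one row’s exhaust blows straight into the next row’s intakes, which is exactly what the layout is meant to prevent.

```python
def alternating_rows(num_rows: int) -> list[str]:
    """Row orientations for a hot-aisle/cold-aisle layout, rows ordered south to north.

    'N' means the row's fronts (air intakes) face north, 'S' that they face south.
    """
    return ["N" if i % 2 == 0 else "S" for i in range(num_rows)]


def classify_aisles(facings: list[str]) -> list[str]:
    """Classify the aisle between each pair of adjacent rows."""
    aisles = []
    for south_row, north_row in zip(facings, facings[1:]):
        if south_row == "N" and north_row == "S":
            aisles.append("cold")   # intakes face each other
        elif south_row == "S" and north_row == "N":
            aisles.append("hot")    # exhausts face each other
        else:
            aisles.append("mixed")  # one row exhausts into the next row's intakes
    return aisles


print(classify_aisles(alternating_rows(4)))  # ['cold', 'hot', 'cold']
print(classify_aisles(["N", "N", "N"]))      # ['mixed', 'mixed']
```

Rows that all face the same way, a common result of incremental deployment, produce only mixed aisles in this model.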

Provide Safe and Convenient Access Ways

Besides being a legal requirement, providing safe and convenient access ways around data center equipment is common sense. An effective layout depends on how well the rows of racks also define the aisles and access ways. Designers should therefore factor in the impact of column locations. A column that falls within a row of racks can take up three or more rack locations, obstruct the aisle, or even force the entire row to be eliminated.
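To see how a single column can cost several rack positions, the sketch below maps a column’s footprint onto rack slots along a row. It assumes 600 mm wide racks and a hypothetical 300 mm clearance on each side of the column; both are illustrative values.

```python
import math


def blocked_rack_slots(column_start_mm: float, column_width_mm: float,
                       rack_width_mm: float = 600, clearance_mm: float = 300) -> list[int]:
    """Indices of rack slots in a row that a building column makes unusable.

    Slot k spans [k * rack_width_mm, (k + 1) * rack_width_mm) along the row.
    The column blocks every slot that overlaps it plus a clearance on each side.
    """
    start = column_start_mm - clearance_mm
    end = column_start_mm + column_width_mm + clearance_mm
    first = max(0, math.floor(start / rack_width_mm))
    last = math.ceil(end / rack_width_mm) - 1
    return list(range(first, last + 1))


# A 600 mm column starting 1.7 m along the row
print(blocked_rack_slots(1700, 600))  # [2, 3, 4]
```

Shifting the same column to start at 1500 mm blocks only slots 2 and 3, which is why column positions are worth checking before rows are fixed.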

Align Equipment With Floor and Ceiling Tile Systems

Floor and ceiling tile systems also play a role in air distribution. The floor grid should align with the racks, especially in data centers with raised floors. Misaligned floor grids and racks can compromise airflow significantly.

You should also align the ceiling tile grid with the floor grid. For this reason, don’t design or install the floor until the equipment layout has been established.
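One way to catch misalignment early is to check planned rack positions against the tile grid before the floor is installed. The sketch assumes 600 mm square tiles, rack positions given by one corner measured from the grid origin, and a hypothetical 10 mm tolerance. The same check could be run against the ceiling grid by measuring from its origin instead.

```python
def misaligned_racks(rack_corners_mm: dict[str, tuple[float, float]],
                     tile_mm: float = 600, tolerance_mm: float = 10) -> list[str]:
    """Racks whose corner does not sit on a floor-tile seam in both x and y.

    Coordinates are measured from the origin of the floor grid.
    """
    def off_seam(value: float) -> float:
        offset = value % tile_mm
        return min(offset, tile_mm - offset)  # distance to the nearest seam

    return [name for name, (x, y) in rack_corners_mm.items()
            if off_seam(x) > tolerance_mm or off_seam(y) > tolerance_mm]


layout = {"R1": (0, 1200), "R2": (600, 1200), "R3": (905, 1200)}
print(misaligned_racks(layout))  # ['R3']
```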

Plan the Layout in Advance

The first stages of deploying data center equipment heavily determine subsequent stages and final equipment installation. Therefore, it is better to plan the entire data center floor layout beforehand.

How to Plan a Server Rack Installation

Server racks should be designed to allow easy and secure access to IT servers and networking devices. Whether you are installing new server racks or thinking of expanding, consider the following:

Rack Location

When choosing a rack for your data center, consider where it will sit in the room. Leave enough space on the sides, front, rear, and top for easy access and airflow. As a rule of thumb, a server rack should occupy at least six standard floor tiles. Don’t install server racks and cabinets below or close to air conditioners, so that a leak cannot cause water damage.
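These placement rules can be checked mechanically on a tile-grid plan. In the sketch below, each rack’s allocation is a rectangle of floor tiles that includes its clearances, the six-tile minimum comes from the rule of thumb above, and the two-tile minimum distance from air conditioners is a hypothetical value; a real plan would take that distance from site standards.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RackAllocation:
    name: str
    col: int    # tile column of the allocation's south-west corner
    row: int    # tile row of that corner
    width: int  # tiles across, including side clearance
    depth: int  # tiles deep, including front and rear clearance


def check_rack_location(alloc: RackAllocation, ac_tiles: list[tuple[int, int]],
                        min_tiles: int = 6, min_gap_from_ac: int = 2) -> list[str]:
    """Flag rack allocations that are too small or too close to air conditioners."""
    problems = []
    area = alloc.width * alloc.depth
    if area < min_tiles:
        problems.append(f"{alloc.name}: {area} tiles allocated, fewer than {min_tiles}")
    last_col = alloc.col + alloc.width - 1
    last_row = alloc.row + alloc.depth - 1
    for ac_col, ac_row in ac_tiles:
        # Tile steps between the AC unit and the allocation on each axis (0 = overlapping)
        gap_x = max(alloc.col - ac_col, 0, ac_col - last_col)
        gap_y = max(alloc.row - ac_row, 0, ac_row - last_row)
        if max(gap_x, gap_y) < min_gap_from_ac:
            problems.append(f"{alloc.name}: less than {min_gap_from_ac} tiles "
                            f"from AC unit at {(ac_col, ac_row)}")
    return problems


rack = RackAllocation("R1", col=0, row=0, width=2, depth=3)
print(check_rack_location(rack, [(5, 5)]))  # []
print(check_rack_location(rack, [(1, 1)]))  # AC unit sits inside the allocation
```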

Rack Layout

Consider rack density when determining the rack layout. More free space within a server rack allows more airflow, so you can leave vertical space between servers and other IT devices to improve cooling. Since hot air rises, place heat-sensitive devices, such as UPS batteries, at the bottom of the rack. Heavy devices should also go at the bottom.
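The placement rules above translate into a simple bottom-up assignment. The sketch assumes a 42U rack, a hypothetical one-unit gap between devices for airflow, and devices described only by height, weight, and a heat-sensitivity flag; the device names and figures are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    name: str
    height_u: int
    weight_kg: float
    heat_sensitive: bool = False


def assign_positions(devices: list[Device], rack_u: int = 42,
                     gap_u: int = 1) -> dict[str, tuple[int, int]]:
    """Place devices bottom-up: heat-sensitive first, then heaviest first.

    Returns {name: (bottom_u, top_u)}, where U1 is the lowest slot.
    """
    order = sorted(devices, key=lambda d: (not d.heat_sensitive, -d.weight_kg))
    positions = {}
    next_u = 1
    for dev in order:
        top = next_u + dev.height_u - 1
        if top > rack_u:
            raise ValueError(f"{dev.name} does not fit in a {rack_u}U rack")
        positions[dev.name] = (next_u, top)
        next_u = top + 1 + gap_u  # leave free space above each device for airflow
    return positions


devices = [
    Device("switch", 1, 5),
    Device("ups-battery", 2, 60, heat_sensitive=True),
    Device("storage", 4, 45),
    Device("server", 2, 25),
]
print(assign_positions(devices))
# {'ups-battery': (1, 2), 'storage': (4, 7), 'server': (9, 10), 'switch': (12, 12)}
```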

Cable Layout

A well-planned rack layout is more than a work of art, and the same is true of cabling. A good cable layout uses labeling and cable management techniques to make power and network cables easy to identify. Mark each cable at both ends rather than in the middle. Your cable management system should also make provisions for future additions and removals.
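A labeling scheme in which each end names both endpoints makes a cable identifiable from either side. The `RACK-Uxx-Pxx` format below is a hypothetical convention chosen for illustration; production deployments often follow a published standard such as ANSI/TIA-606 instead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    rack: str
    u: int      # rack unit of the device
    port: int   # port on that device

    def code(self) -> str:
        return f"{self.rack}-U{self.u:02d}-P{self.port:02d}"


def cable_labels(cable_id: str, a: Endpoint, b: Endpoint) -> tuple[str, str]:
    """Return (label for end A, label for end B); each label names both endpoints."""
    return (f"{cable_id} {a.code()} > {b.code()}",
            f"{cable_id} {b.code()} > {a.code()}")


# A registry keyed by cable ID keeps labels reproducible as cables are added or removed
registry = {"C0042": (Endpoint("R01", 12, 3), Endpoint("R05", 40, 1))}
for cable_id, (end_a, end_b) in registry.items():
    print(cable_labels(cable_id, end_a, end_b))
```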

Conclusion

Designing a data center layout is challenging for both small and established IT facilities, and building or upgrading a data center is often perceived as intimidating and difficult. A detailed data center layout makes the process much easier. Remember that even small changes to the plan during installation can have costly consequences downstream.

Article Source: Data Center Layout
