High-Performance Computing (HPC) has become an indispensable tool for solving complex problems and driving innovation in fields such as science, meteorology, finance, and healthcare. However, operating HPC systems efficiently requires specialized infrastructure and support.

Understanding how data centers support HPC starts with the three fundamental components of a high-performance computing system: compute, storage, and networking.

Facilities in High-Performance Computing

Intensive computations in HPC environments generate substantial heat, which calls for advanced cooling solutions. Efficient cooling prevents overheating, keeps systems stable, and prolongs hardware lifespan. To support HPC, data centers employ cutting-edge cooling facilities, including liquid cooling systems and precision air conditioning. Data center architects are also exploring newer technologies such as immersion cooling, in which servers are submerged in dielectric (non-conductive) liquids for effective heat dissipation.

Success in HPC data centers relies on a range of specialized equipment tailored to meet the unique demands of high-performance computing. Key components include data center switches, server network cards, high-speed optical modules, DAC and AOC cables, and power supplies.

The Growing Demand for Network Infrastructure in High-Performance Computing

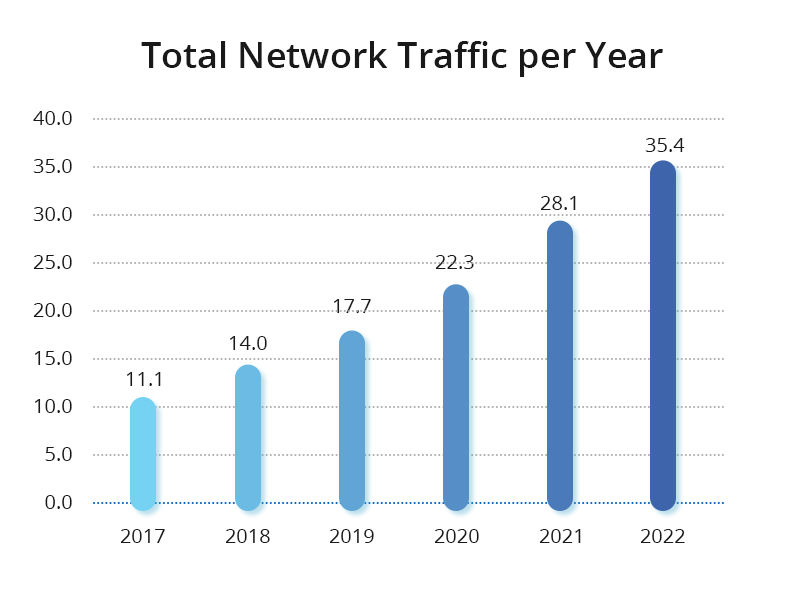

With technologies like 5G, big data, and the Internet of Things (IoT) spreading through every part of society, the move toward an intelligent, digitized society over the next two to three decades looks inevitable. Data center computing power has become a key driving force, and the focus is shifting from resource scale to computational scale.

To meet the ever-growing demand for computing power, HPC has become a top priority, especially as computational clusters grow from the petascale to the exascale. This shift places increasingly high demands on interconnect network performance and marks a clear trend toward deep integration between computation and networking. HPC has different network performance requirements in three typical scenarios: loosely coupled computing, tightly coupled computing, and data-intensive computing.

Across all three scenarios, HPC imposes stringent requirements on network throughput and latency. To meet these demands, the industry widely adopts Remote Direct Memory Access (RDMA) as an alternative to the TCP protocol, because it reduces latency and lowers the CPU overhead that data transfer imposes on servers. Despite these advantages, RDMA is sensitive to packet loss, which is why lossless networks are so important.
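To give a feel for why packet loss is costly, here is a minimal sketch that counts how many packets go on the wire when a single loss forces the sender to resend everything from the lost packet onward (go-back-N style recovery). The recovery scheme, the function name `go_back_n_transmissions`, and the window and packet counts are all assumptions made for illustration. The article does not specify how any particular RDMA NIC recovers from loss.

```python
from typing import Iterable


def go_back_n_transmissions(total_packets: int, window: int,
                            lost_first_try: Iterable[int]) -> int:
    """Count packets sent when each loss triggers a go-back-N resend.

    Simplified, hypothetical model: the sender transmits one window at a
    time. If a packet in the window is lost on its first attempt, the
    packets after it in that window are discarded by the receiver and the
    sender restarts from the lost packet. Retransmissions are never lost.
    """
    pending_losses = set(lost_first_try)
    sent = 0
    base = 0
    while base < total_packets:
        end = min(base + window, total_packets)
        sent += end - base  # the whole window goes on the wire
        lost = [seq for seq in range(base, end) if seq in pending_losses]
        if lost:
            first_lost = min(lost)
            pending_losses.discard(first_lost)  # the retry will succeed
            base = first_lost  # go back: resend from the lost packet
        else:
            base = end
    return sent


no_loss = go_back_n_transmissions(1000, 100, [])
one_loss = go_back_n_transmissions(1000, 100, [50])
print(no_loss, one_loss)
```

In this toy model, one lost packet out of 1000 causes 50 extra transmissions, because every packet sent after the lost one in the same window has to be sent again. Real transports differ in detail, but the sketch shows why even a low loss rate can waste a disproportionate share of bandwidth.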

The Evolution of High-Performance Computing Networks

Traditional data center networks have historically adopted a multi-hop symmetric architecture based on Ethernet technology, relying on the TCP/IP protocol stack for transmission. However, despite more than 30 years of development, TCP/IP no longer meets the needs of HPC, and Remote Direct Memory Access (RDMA) technology has gradually replaced it as the preferred protocol for HPC networks. The choice of RDMA network layer protocols has also evolved, from expensive lossless networks based on the InfiniBand (IB) protocol to intelligent lossless networks based on Ethernet.

From TCP to RDMA

In traditional data centers, Ethernet technology and the TCP/IP protocol stack have been the norm for building multi-hop symmetric network architectures. However, the TCP/IP network has two main limitations, high latency and heavy CPU usage, and is no longer sufficient to meet the demands of high-performance computing. To address these challenges, RDMA functionality has been introduced on the server side. RDMA is a direct memory access technology that transfers data directly between the memories of two computers without involving the operating system, bypassing time-consuming processor operations. This approach achieves high bandwidth, low latency, and low CPU overhead.

From IB to RoCE

RDMA enables applications to read and write data directly through the network card. Its zero-copy mechanism allows the receiving end to read data directly from the sending end's memory, which significantly reduces the CPU burden. Currently, there are three choices for RDMA network layer protocols: InfiniBand, iWARP (Internet Wide Area RDMA Protocol), and RoCE (RDMA over Converged Ethernet). RoCE has the advantage of running over Ethernet, but its sensitivity to packet loss means it needs a lossless Ethernet network underneath. This evolution of HPC networks reflects a continuous pursuit of better performance, efficiency, and interoperability.
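The zero-copy idea can be illustrated inside a single process. The sketch below is only an analogy and does not perform RDMA or touch a network card. It contrasts a copied slice of a buffer with a `memoryview` slice that refers to the same memory. The buffer size and variable names are chosen for illustration.

```python
# Stands in for an application buffer that is to be "sent".
payload = bytearray(b"x" * 1_000_000)

# Slicing a bytearray allocates a new object and copies the bytes.
copied = bytes(payload[:4096])

# Slicing a memoryview creates a window onto the same memory: no copy.
view = memoryview(payload)[:4096]

# Change the original buffer in place (same length, so no resize).
payload[0:4] = b"RDMA"

# The copy still holds the old bytes; the view sees the new ones.
copied_sees_change = copied[:4] == b"RDMA"
view_sees_change = bytes(view[:4]) == b"RDMA"
print(copied_sees_change, view_sees_change)
```

The copy costs CPU time and memory bandwidth in proportion to the data size, while the view costs almost nothing. RDMA applies the same principle across machines by letting the network card access registered application memory directly.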

Enterprise Innovative Solution: Designing High-Performance Data Center Networks

The architecture of data center networks has evolved from the traditional core-aggregation-access model to the modern Spine-Leaf design. This approach makes full use of the interconnection bandwidth, lowers the convergence (oversubscription) ratio between layers, and is easy to scale. When traffic bottlenecks occur, the network can be expanded horizontally by adding uplinks, which lowers the convergence ratio with minimal disruption to the existing network. Overlay networks use EVPN-VXLAN technology to achieve flexible network deployment and resource allocation.
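The convergence ratio mentioned above, often called the oversubscription ratio, is the total server-facing bandwidth of a leaf switch divided by its total uplink bandwidth to the spines. A minimal sketch of the arithmetic follows. The port counts and speeds are hypothetical and are not taken from the design described in this article.

```python
from fractions import Fraction


def oversubscription_ratio(downlinks: int, downlink_gbps: int,
                           uplinks: int, uplink_gbps: int) -> Fraction:
    """Return downlink bandwidth divided by uplink bandwidth for one leaf."""
    return Fraction(downlinks * downlink_gbps, uplinks * uplink_gbps)


# Hypothetical leaf: 48 x 25G server ports, 4 x 100G uplinks.
before = oversubscription_ratio(48, 25, 4, 100)

# Same leaf after doubling the uplinks to 8 x 100G.
after = oversubscription_ratio(48, 25, 8, 100)

print(f"before: {before}:1, after: {after}:1")
```

With these example numbers, doubling the uplinks halves the ratio from 3:1 to 3/2:1, which is the horizontal expansion the paragraph describes. A ratio of 1:1 means the leaf is non-blocking.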

This solution draws on the design experience of internet data center networks, adopting the Spine-Leaf architecture and EVPN-VXLAN technology to provide a versatile and scalable network infrastructure for upper-layer services. Production and office networks are isolated by domain firewalls and connected to office buildings, labs, and regional center exits. The core switches of the production network provide up to 1.6 Tb/s of inter-POD communication bandwidth and 160 Gb/s of high-speed network egress capacity, and each POD's internal horizontal network capacity reaches 24 Tb/s, which helps keep packet loss to a minimum. The building wiring is planned around the Spine-Leaf architecture, with each POD's switches interconnected using 100G links and deployed in top-of-rack (TOR) mode. The overall network structure is more streamlined, which improves cable deployment and management efficiency.

Future-Oriented Equipment Selection

When envisioning and building data center networks, careful consideration of technological advancements, industry trends, and operational costs over the next five years is crucial. The choice of network switches plays a vital role in the overall design of data center networks. Traditional large-scale network designs often opt for chassis-based equipment to enhance the overall capacity of the network system, but scalability is limited.

Therefore, for the network equipment selection of this project, NVIDIA strongly advocates for adopting a modular switch network architecture. This strategic approach facilitates rapid familiarization by maintenance teams. Additionally, it provides operational flexibility for future network architecture adjustments, equipment reuse, and maintenance replacements.

In response to ongoing business transformation and the surge in demand for big data, most data center network designs adopt the mature Spine-Leaf architecture, coupled with EVPN-VXLAN technology for efficient network virtualization. This architecture delivers high-bandwidth, low-latency traffic forwarding and lays the foundation for scalability and flexibility.

How FS Can Help

FS is a professional provider of communication and high-speed network system solutions for network, data center, and telecommunications customers. Leveraging NVIDIA® InfiniBand switches, 100G/200G/400G/800G InfiniBand transceivers, and NVIDIA® InfiniBand adapters, FS offers customers a comprehensive set of solutions based on InfiniBand and lossless Ethernet (RoCE). These solutions meet diverse application requirements, enabling users to accelerate their businesses and enhance performance. For more information, please visit FS.COM.